The Glimmer VM: Boots Fast and Stays Fast

Great web applications boot up fast and stay silky smooth once they've started.

In other contexts, applications can choose between quick loading and responsiveness once they've loaded. Great games can get away with a long loading bar as long as they react instantly once the gamer gets going. In contrast, scripting languages like Ruby, Python or Bash optimize for instant boot, but run their programs more slowly.

To optimize for boot time, scripting languages use interpreters and avoid expensive compilation steps. To optimize for responsiveness, games pre-fill their caches and do as much work up front as they can get away with. The web demands that we do both at the same time: users coming from search results pages must see content within a second on modern devices, but also demand 60fps once the application gets going.

Over the years, web browsers have responded to these requirements with more JIT tiers and interpreters for initial startup, while at the same time improving the speed of the rendering pipeline and the predictability of scheduling.

The rendering engines that come with front-end JavaScript frameworks have a similar problem: how do we get content onto the screen as fast as possible, while still maintaining very fast updating performance?

Ember.js Performance

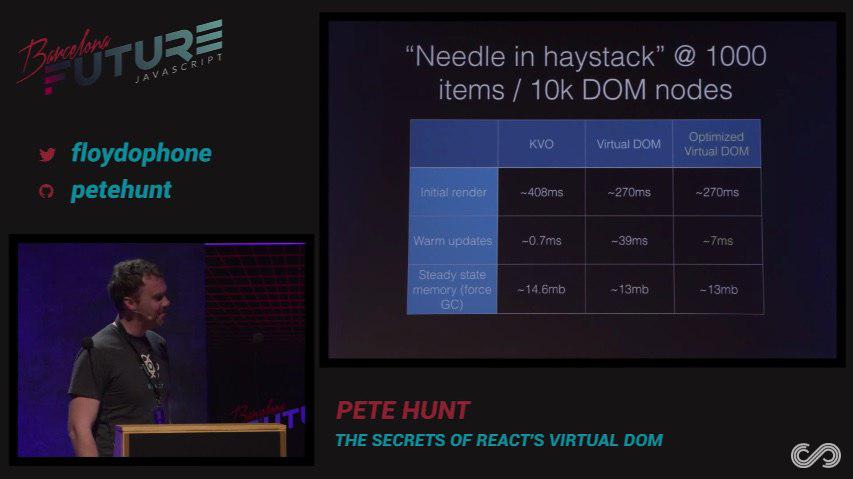

A few years ago, Ember.js was slow to render initially, but quite fast at updates. In this early React talk, Pete Hunt showed that Ember (called "KVO" in this slide) manages to beat React (even with shouldComponentUpdate!) at updating the DOM when only a single input changed.

While the Glimmer VM clearly needed to improve Ember's initial render performance, we also had a constraint that was unique in the framework space: we could not afford to regress on Ember's already-quite-good updating performance.

Getting initial render performance competitive with the Virtual DOM approach and updating performance competitive with the KVO ("Key-Value Observing") approach proved to be quite difficult.

To improve initial render performance without sacrificing updating performance, we use two general approaches: static optimizations and dynamic optimizations.

Static Optimizations

When we started working on optimizing Glimmer, we noticed that many of the benchmarks that people use to compare frameworks include very little HTML content and are only comparing the performance of updating dynamic content ("curlies").

In contrast, looking at our own real-world applications, we found that templates are full of HTML that never changes: nested tags, attributes, classes, and static text. Components that look visually small can often have heaps of markup behind the scenes.

Because of this, we put a lot of effort into reducing the cost of static content, both during initial rendering and, more importantly, during updates.

Consider a template like this, using Bootstrap markup for navigation:

<ul class="nav nav-tabs">
  <li role="presentation" class="active"><a href="/home">{{home}}</a></li>
  <li role="presentation"><a href="/profile">{{profile}}</a></li>
  <li role="presentation"><a href="/messages">{{messages}}</a></li>
</ul>

Glimmer's static optimizations partially evaluate the template so that recomputing the entire template only needs to re-evaluate the three dynamic expressions in curlies. Since we can tell statically that the rest of the template can never change, Glimmer is able to avoid doing work that a Virtual DOM implementation would have to do.

I'll describe the details of this approach in another post, but the short version is that we compile templates into "append-time" opcodes for a bytecode VM. The process of running the "append-time program" produces the "updating program", which is then run every time the inputs change.

The compilation process boils down static content into static append-time opcodes, which don't add anything to the updating program.
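To make the relationship between the two programs concrete, here is a minimal sketch of a toy VM. It is not Glimmer's real instruction set: the `AppendOpcode` shapes, `runAppend`, `runUpdate`, and the string array that stands in for the DOM are all assumptions made for illustration. What it tries to show is that running the append-time program emits an updating opcode only for dynamic content.

```typescript
// Toy model only: these opcode shapes are assumptions, not Glimmer's real instruction set.
type AppendOpcode =
  | { kind: "static"; html: string } // markup that can never change
  | { kind: "dynamic"; key: string }; // a {{curly}} expression

type Inputs = Record<string, string>;

// One instruction of the updating program: re-check a single dynamic slot.
type UpdatingOpcode = (inputs: Inputs) => void;

interface RenderResult {
  output: string[]; // stands in for the DOM
  updating: UpdatingOpcode[]; // produced as a side effect of appending
}

// Run the append-time program once. Static opcodes only write output;
// dynamic opcodes write output and also emit an updating opcode.
function runAppend(program: AppendOpcode[], inputs: Inputs): RenderResult {
  const output: string[] = [];
  const updating: UpdatingOpcode[] = [];
  for (const op of program) {
    if (op.kind === "static") {
      output.push(op.html);
      continue;
    }
    const key = op.key;
    const slot = output.length;
    output.push(inputs[key] ?? "");
    updating.push((next) => {
      const value = next[key] ?? "";
      if (output[slot] !== value) output[slot] = value;
    });
  }
  return { output, updating };
}

// Re-rendering runs only the updating program; static opcodes are never revisited.
function runUpdate(result: RenderResult, inputs: Inputs): void {
  for (const op of result.updating) op(inputs);
}

const navProgram: AppendOpcode[] = [
  { kind: "static", html: '<ul class="nav nav-tabs"><li><a href="/home">' },
  { kind: "dynamic", key: "home" },
  { kind: "static", html: '</a></li><li><a href="/profile">' },
  { kind: "dynamic", key: "profile" },
  { kind: "static", html: '</a></li><li><a href="/messages">' },
  { kind: "dynamic", key: "messages" },
  { kind: "static", html: "</a></li></ul>" },
];

const rendered = runAppend(navProgram, { home: "Home", profile: "Profile", messages: "Messages" });
runUpdate(rendered, { home: "Home", profile: "Profile", messages: "Inbox (3)" });
console.log(rendered.updating.length); // 3 updating opcodes for 7 append-time opcodes
console.log(rendered.output.join(""));
```

In this sketch the cost of `runUpdate` depends only on the number of curlies, no matter how much static markup surrounds them.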

This compares favorably to the Virtual DOM approach, which works by executing the entire "append-time program" (the user's render functions) each time, and uses a diffing strategy to avoid unnecessary updates to the DOM.

The Glimmer strategy, in contrast, uses the declarative nature of the templating syntax to separate out the static portions, which only need to run the first time, and the dynamic portions, which need to be executed every time the inputs change.
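This separation can happen before anything is rendered, because curlies are the only places where a template can vary. The following is a rough sketch under that assumption. `splitTemplate` and `TemplatePart` are hypothetical names, and a regular expression stands in for a real template parser, which Glimmer's compiler certainly is not limited to.

```typescript
// Hypothetical mini-compiler: a real template compiler parses properly instead of using a regex.
type TemplatePart =
  | { kind: "static"; text: string }
  | { kind: "dynamic"; name: string };

// Split a template into runs of static markup and individual {{curly}} expressions.
function splitTemplate(source: string): TemplatePart[] {
  const parts: TemplatePart[] = [];
  const curly = /\{\{\s*([A-Za-z_][\w.]*)\s*\}\}/g;
  let last = 0;
  let match: RegExpExecArray | null;
  while ((match = curly.exec(source)) !== null) {
    if (match.index > last) {
      // Everything between two curlies collapses into one static part.
      parts.push({ kind: "static", text: source.slice(last, match.index) });
    }
    parts.push({ kind: "dynamic", name: match[1] ?? "" });
    last = match.index + match[0].length;
  }
  if (last < source.length) {
    parts.push({ kind: "static", text: source.slice(last) });
  }
  return parts;
}

const navTemplate =
  '<ul class="nav nav-tabs">' +
  '<li role="presentation" class="active"><a href="/home">{{home}}</a></li>' +
  '<li role="presentation"><a href="/profile">{{profile}}</a></li>' +
  '<li role="presentation"><a href="/messages">{{messages}}</a></li>' +
  "</ul>";

const navParts = splitTemplate(navTemplate);
const dynamicNames: string[] = [];
for (const part of navParts) {
  if (part.kind === "dynamic") dynamicNames.push(part.name);
}
console.log(navParts.length); // 7 parts: 4 static runs and 3 dynamic expressions
console.log(dynamicNames); // ["home", "profile", "messages"]
```

However many tags and attributes sit between two curlies, they collapse into a single static part that only the first render ever needs to touch.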

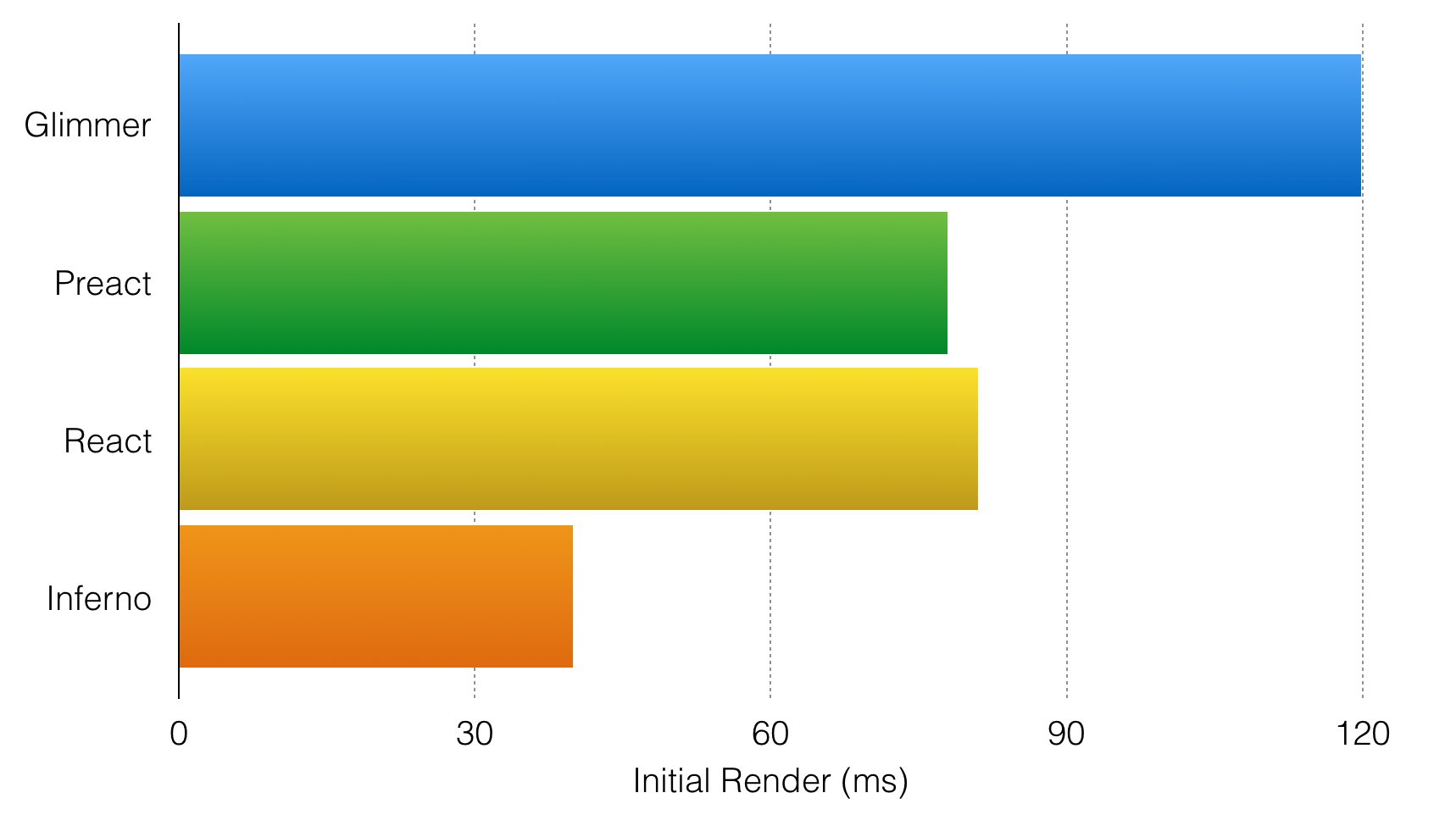

To compare how well these optimizations performed, we used the initial render and partial update benchmarks in the JS Frameworks Benchmark suite.

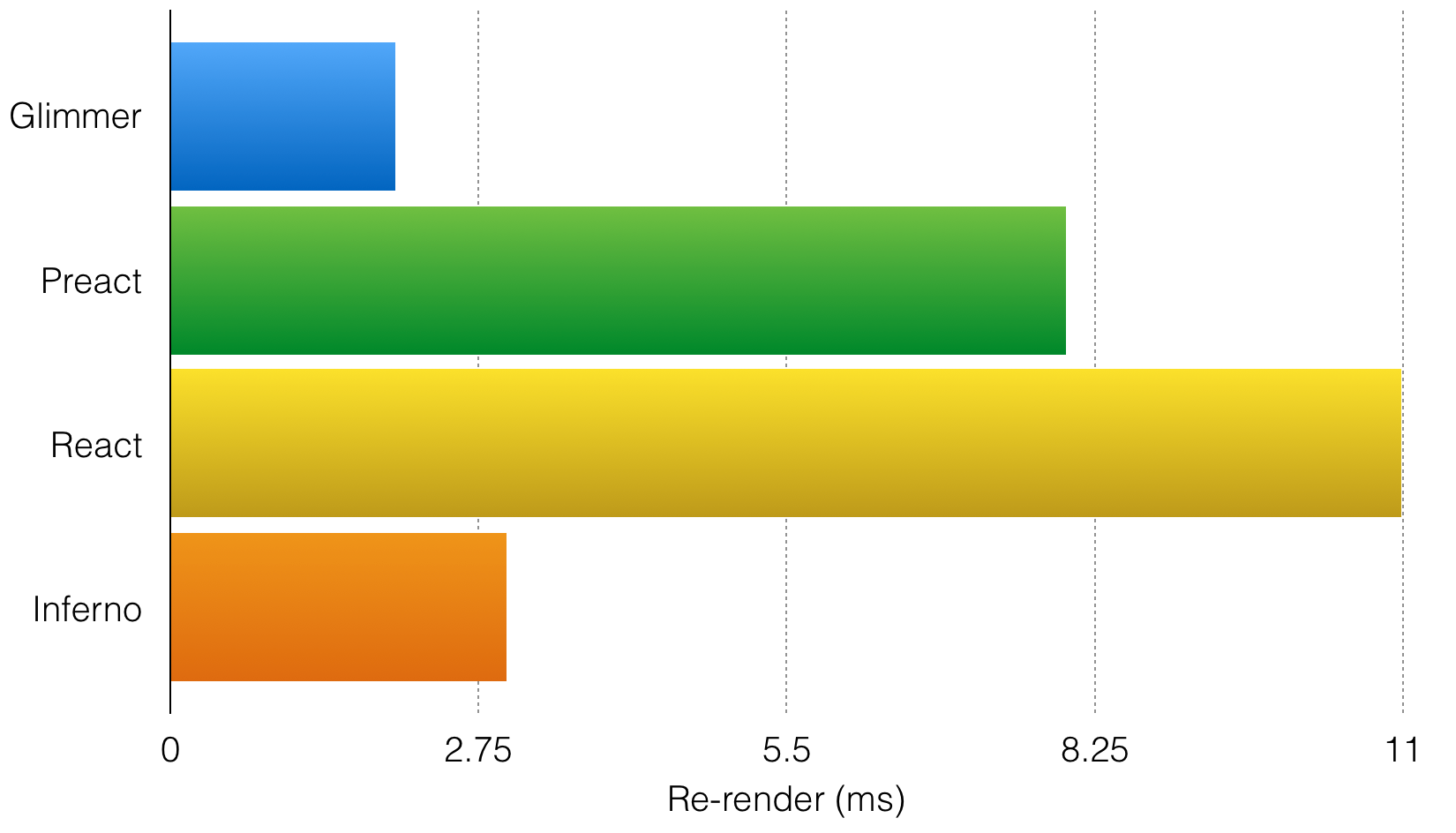

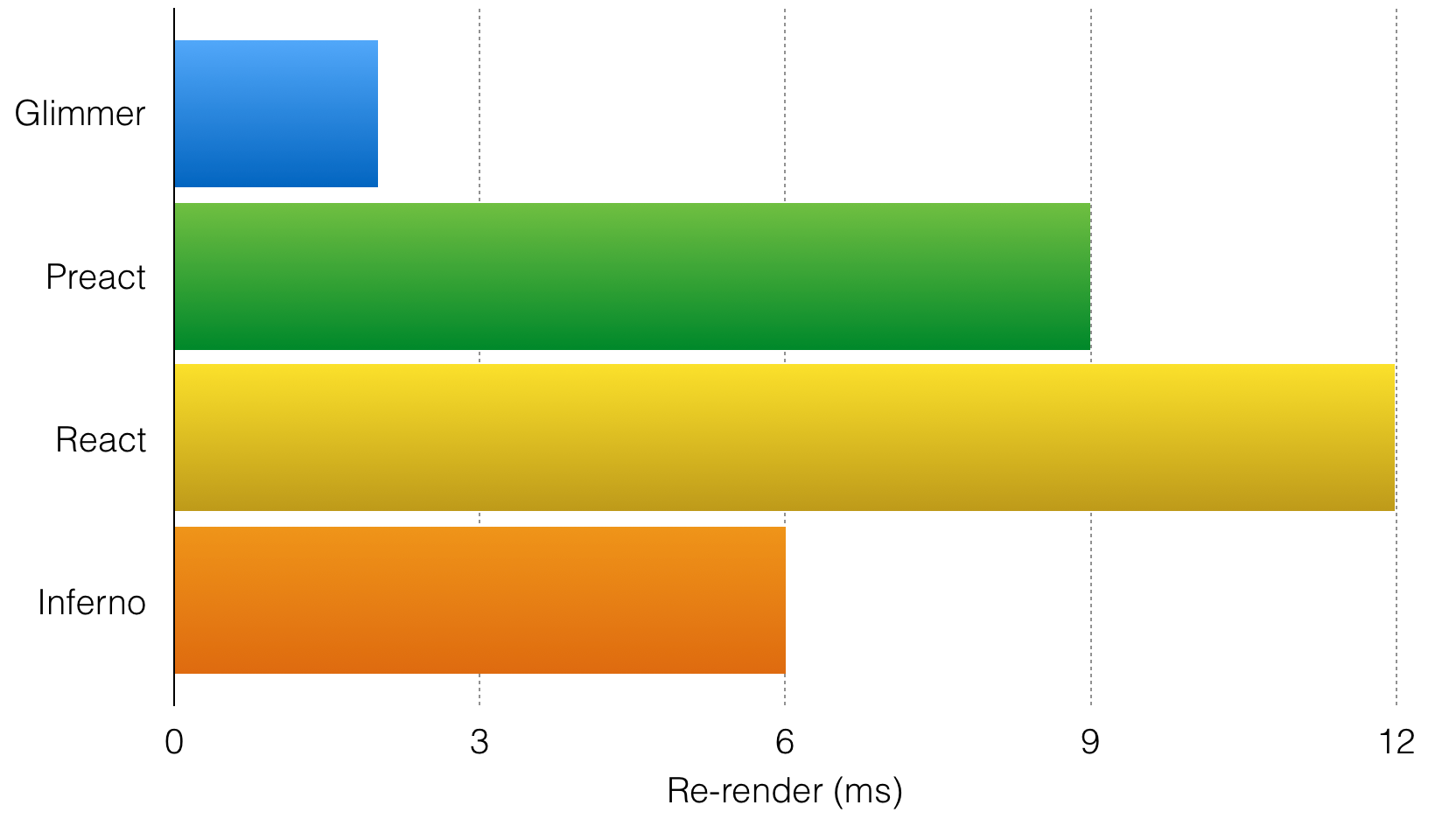

The vanilla version of the benchmark has very little static content. In this benchmark, Glimmer performs acceptably on initial render, and beats the most optimized Virtual DOM implementations on re-render.

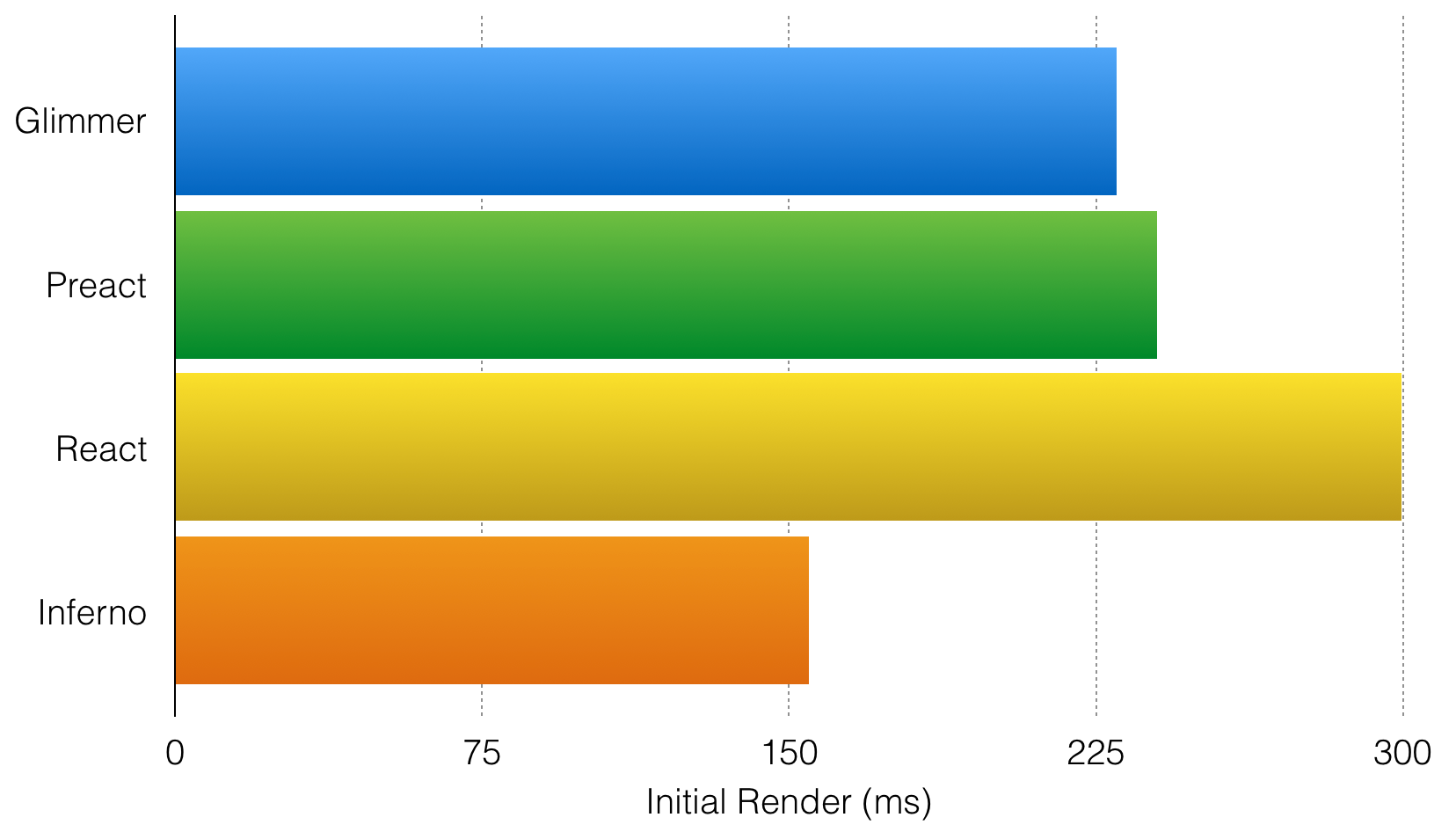

To test our hypothesis that Glimmer performs better with more static content, we adapted the benchmark to add some realistic static content into the mix (a Bootstrap media object in each row). This... changed things.

We're now competitive with most Virtual DOM implementations on initial render, and our updating time hasn't budged. In contrast, even highly optimized Virtual DOM implementations have to do more work to skip over the static content.

While benchmarks don't focus much on static content, we've focused a lot of effort on real-world applications. By specializing static content in the Glimmer VM, we improve the relative performance of initial render, and by using partial evaluation, we avoid doing any work to revalidate static content when re-rendering.

Dynamic Optimizations

Our dynamic optimizations reduce the portion of the updating program that we have to run in practice.

Under the hood, we accomplish this by letting every piece of data that goes into a template separate the process of computing its current value from the process of determining whether that value might have changed.

This allows Glimmer to support a mix of different data management strategies like Redux, MobX, and Ember's existing strategy of using .set for notification. It also allows us to experiment with new strategies like Glimmer.js' MobX-inspired @tracked approach that developers can incrementally adopt as their application grows.
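As a rough illustration of why this separation accommodates different strategies, the sketch below defines one small interface and implements it twice: once with explicit notification (in the spirit of .set) and once over an immutable store (in the spirit of Redux). Every name here (`Reference`, `NotifyingProperty`, `Store`, `Selector`, `CachedText`) is hypothetical and simplified, and none of it is Glimmer's actual API.

```typescript
// Hypothetical interface: computing a value is kept apart from validating it.
interface Reference<T> {
  value(): T; // compute the current value (possibly expensive)
  snapshot(): unknown; // cheap token describing "now"
  isValid(snapshot: unknown): boolean; // false if the value might have changed
}

// Strategy 1: explicit notification. Each set() bumps a revision counter.
class NotifyingProperty<T> implements Reference<T> {
  private current: T;
  private revision = 0;
  constructor(initial: T) {
    this.current = initial;
  }
  set(next: T): void {
    this.current = next;
    this.revision++;
  }
  value(): T {
    return this.current;
  }
  snapshot(): unknown {
    return this.revision;
  }
  isValid(snapshot: unknown): boolean {
    return snapshot === this.revision;
  }
}

// Strategy 2: immutable store. A new state object means "might have changed".
class Store<S> {
  private state: S;
  constructor(initial: S) {
    this.state = initial;
  }
  getState(): S {
    return this.state;
  }
  replace(next: S): void {
    this.state = next;
  }
}

class Selector<S, T> implements Reference<T> {
  private store: Store<S>;
  private select: (state: S) => T;
  constructor(store: Store<S>, select: (state: S) => T) {
    this.store = store;
    this.select = select;
  }
  value(): T {
    return this.select(this.store.getState());
  }
  snapshot(): unknown {
    return this.store.getState();
  }
  isValid(snapshot: unknown): boolean {
    return snapshot === this.store.getState();
  }
}

// The consumer depends only on the interface, not on either strategy.
class CachedText {
  updates = 0;
  text: string;
  private token: unknown;
  private ref: Reference<string>;
  constructor(ref: Reference<string>) {
    this.ref = ref;
    this.text = ref.value();
    this.token = ref.snapshot();
  }
  revalidate(): void {
    if (this.ref.isValid(this.token)) return; // skip computing the value entirely
    this.text = this.ref.value();
    this.token = this.ref.snapshot();
    this.updates++;
  }
}

const title = new NotifyingProperty("Inbox");
const userStore = new Store({ user: { name: "Ada" } });
const titleText = new CachedText(title);
const nameText = new CachedText(new Selector(userStore, (s) => s.user.name));

title.set("Inbox (3)");
titleText.revalidate();
nameText.revalidate();
console.log(titleText.updates, nameText.updates); // 1 0
```

Note that `isValid` is allowed to be conservative: replacing the store state with an equal but new object would trigger a recompute in this sketch, which matches the "might have changed" wording above.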

If you're familiar with Virtual DOM approaches like React, you've probably learned how to implement shouldComponentUpdate in your applications to improve performance hotspots.

Separating out values from validation in Glimmer gives you shouldComponentUpdate for free, and it works with many different kinds of state management solutions.

When @tracked landed in Glimmer.js, the view-layer optimization that skips over unchanged subtrees (automatic shouldComponentUpdate) didn't need any changes: all of the work was done in the validation rules of @tracked properties.
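The sketch below illustrates, under heavy simplification, how a view layer can skip unchanged subtrees using nothing but validation data. `TrackedValue` is a hypothetical stand-in for a @tracked property (the real decorator works differently), and `Subtree` and the global revision counter are likewise assumptions made for the example.

```typescript
// Global clock: bumped whenever any tracked value actually changes.
let globalRevision = 0;

// The only thing the view layer needs to know about its inputs.
interface Revisioned {
  lastChanged(): number;
}

// Hypothetical stand-in for a @tracked property.
class TrackedValue<T> implements Revisioned {
  private current: T;
  private changedAt: number;
  constructor(initial: T) {
    this.current = initial;
    this.changedAt = ++globalRevision;
  }
  get value(): T {
    return this.current;
  }
  set value(next: T) {
    if (next === this.current) return; // writing the same value invalidates nothing
    this.current = next;
    this.changedAt = ++globalRevision;
  }
  lastChanged(): number {
    return this.changedAt;
  }
}

// A subtree caches its output together with the revision it was rendered at.
class Subtree {
  renders = 0;
  private html = "";
  private renderedAt = -1;
  private inputs: Revisioned[];
  private template: () => string;

  constructor(inputs: Revisioned[], template: () => string) {
    this.inputs = inputs;
    this.template = template;
  }

  rerender(): string {
    // Combine the inputs' revisions: the subtree is as fresh as its newest input.
    let newest = 0;
    for (const input of this.inputs) {
      newest = Math.max(newest, input.lastChanged());
    }
    if (newest > this.renderedAt) {
      this.html = this.template();
      this.renderedAt = newest;
      this.renders++;
    }
    return this.html;
  }
}

const labelA = new TrackedValue("first");
const labelB = new TrackedValue("second");
const subtreeA = new Subtree([labelA], () => "<li>" + labelA.value + "</li>");
const subtreeB = new Subtree([labelB], () => "<li>" + labelB.value + "</li>");

subtreeA.rerender();
subtreeB.rerender(); // initial render: both templates run
labelB.value = "second (edited)";
subtreeA.rerender();
subtreeB.rerender(); // only subtreeB runs its template again
console.log(subtreeA.renders, subtreeB.renders); // 1 2
```

`Subtree` never inspects how its inputs store or change their data. All the strategy-specific work lives in `TrackedValue`, which is the point the paragraph above makes about @tracked.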

This approach is what makes Glimmer perform so well in re-rendering benchmarks like the ones above.

When Glimmer 2 landed in Ember, we weren't surprised to see real-world apps improve their initial rendering performance, since that was the primary goal of Glimmer 2.

We were happy to also see improvements in updating performance compared to previous versions of Ember.

This is, in part, due to optimizations that we unlocked with the improved architecture of the underlying rendering system.

Explaining how Glimmer models values and validation, and how we use that model to implement shouldComponentUpdate for you, requires digging into more technical detail, which I'll describe in a follow-up post.